However, in this chapter I'm coming back all the way to where we started: running Agile sprints. To be more specific: figuring out the status of iterations and adjusting their course.

Sorry, figuring out what?

Just to make sure you're still with me here, let's assume we're halfway into a 3-week sprint and have completed a third of the committed user stories. Our goal is to get a good projection of how many will be done when the clock strikes 5 PM next Friday.

In case your "What" question has been replaced by "Why":

- Other teams and customers depend on our commitments. If we break those, we should at least avoid delivering bad news at the eleventh hour.

- Our own sprint plans depend on what we achieved. If we have to push tasks back at the last moment, then future sprints will exemplify a nice domino effect.
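To make the halfway-through scenario concrete, here is a minimal sketch of the most naive projection possible: a straight-line extrapolation of the pace so far. The function name and the numbers (12 committed stories, 15 working days) are my own assumptions for illustration; only "halfway in, a third done" comes from the scenario above.

```python
def project_completed(total_stories, done_so_far, days_elapsed, sprint_days):
    """Linearly extrapolate how many stories will be done at sprint end.

    Assumes the pace seen so far continues unchanged, which is exactly
    the assumption a real sprint tends to break.
    """
    if days_elapsed <= 0:
        raise ValueError("need some elapsed time to project from")
    projected = done_so_far * sprint_days / days_elapsed
    # We cannot complete more than we committed to.
    return min(total_stories, projected)


# Hypothetical sprint: 15 working days, 12 committed stories,
# halfway through (7.5 days) with a third (4 stories) done.
projected = project_completed(total_stories=12, done_so_far=4,
                              days_elapsed=7.5, sprint_days=15)
print(projected)  # 8.0
```

At this pace we would finish 8 of the 12 stories. The rest of the chapter is about whether the numbers fed into such a projection can be trusted in the first place.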

Well, having answered the What and Why (which, to be fair, may not have been asked), it's easy to pull out the answer to How. Why don't you use the...

Burndown chart

Here's the thing: more often than not, when looking at burndown charts, I have heard one of two variants of this phrase: "It is better/worse than it looks".

Of course, your experience might have been different, but let me expand a little bit first. There are two ways of burning down sprints: (1) by logging time, (2) by completing tasks.

At the risk of stating the obvious: with the former, the burndown chart goes down whenever someone logs time, and with the latter whenever a task gets done.

Logging time - worse than it looks

This is the most natural way of tracking progress: we have estimated a task at 3 days, and we log a day each on Monday, Tuesday and Wednesday.

In this idealised world, the burndown chart looks like this:

Unfortunately, the more sprints we put behind us, the less ideal the world looks.

The crucial point is that I care far more about time remaining than about time spent. Perhaps someone, somewhere, somehow, repeatedly estimates tasks right and completes them at an even pace, but in reality, it is not unheard of to estimate a task at 3 days and take a week and a half to complete it. This is just how software development is: as hard as we try, we can't always avoid surprises.

So, by the time Wednesday turns into Thursday, the metrics look great - 3 days logged. In fact, the situation is dire: we are going to spend another week on the task, and most likely push a ton of stuff into the next sprint.
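The gap between what the chart shows and what is really left can be sketched in a few lines. The 3-day estimate and the three daily logs come from the example above; the 8-day actual effort (3 days spent plus "another week") is my own rounding, and `burn_down` is a hypothetical helper rather than any tool's API.

```python
def burn_down(total_days, logged_per_day):
    """Remaining days after each daily time log, floored at zero."""
    remaining, left = [], total_days
    for logged in logged_per_day:
        left = max(0, left - logged)
        remaining.append(left)
    return remaining


logged = [1, 1, 1]   # Monday, Tuesday, Wednesday
ESTIMATE_DAYS = 3    # what we planned
ACTUAL_DAYS = 8      # assumption: what the task really needs

chart_view = burn_down(ESTIMATE_DAYS, logged)
real_view = burn_down(ACTUAL_DAYS, logged)

print(chart_view)  # [2, 1, 0]
print(real_view)   # [7, 6, 5]
```

On Wednesday evening the chart says zero days remain, while five more days of work are still ahead.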

However, why don't we ask engineers to update time remaining? Surely, they know how much work is still outstanding, and can adjust the estimate accordingly.

Well, they don't always know. If you have spent enough time in the industry, you must have heard the dreaded phrase "90% complete", only to discover that the remaining 10% has a life of its own.

This is the reason time logging paints too good a picture. It usually shows how much time is spent on tasks, which does not necessarily help with figuring out the health of a sprint.

Logging tasks - better than it looks

A more traditional school of thought is burning the sprint down only when tasks get completed.

This has a strong statement behind it: I do not care whether 80% or 67.5% of a task is complete; I only care when its acceptance criteria are ticked off, and it is declared done.

It's definitely more honest, but it does have a couple of weaknesses:

a) It does not cope well with large stories. There's no insight from the burndown chart on how they're getting on, and the longer the story is, the more opaque it becomes. In worst cases, we end up getting the sordid picture below:

b) It is susceptible to minor roadblocks. E.g. a defect is solved, committed, and ready to be validated, but there is no environment to validate it on; in the meantime, the burndown chart flatlines.
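Weakness (a) is easy to reproduce with a small sketch. Everything here is hypothetical: a 10-day sprint, 13 story points, and a helper of my own that burns points down only on the day a story is declared done.

```python
def burn_down_on_completion(stories, sprint_days):
    """Remaining points at the end of each day.

    stories: list of (points, completion_day) pairs; completion_day is
    None for a story that is never finished within the sprint.
    """
    total = sum(points for points, _ in stories)
    remaining = []
    for day in range(1, sprint_days + 1):
        done = sum(points for points, done_day in stories
                   if done_day is not None and done_day <= day)
        remaining.append(total - done)
    return remaining


# The same 13 points, planned as five small stories or one large one.
small_stories = [(3, 2), (3, 4), (3, 6), (2, 8), (2, 10)]
large_story = [(13, 10)]

print(burn_down_on_completion(small_stories, 10))
# [13, 10, 10, 7, 7, 4, 4, 2, 2, 0]
print(burn_down_on_completion(large_story, 10))
# [13, 13, 13, 13, 13, 13, 13, 13, 13, 0]
```

Both sprints end at zero, but the second chart says nothing at all until the last day, which is when it is too late to adjust course.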

However, honesty trumps visibility, and these weaknesses can be overcome.

For starters, there are many other good reasons to avoid large tasks. They are usually a symptom of planning gaps, so one could argue that with proper homework, we don't need to worry about tracking them, and problem (a) does not exist.

As for minor roadblocks: there is a variety of tactics for getting them out of the way, but no single recipe. I usually prefer constructing stories with as few external dependencies as possible, precisely for tracking purposes.

Thus, with everything taken into account, the only benefit of logging time is for retrospectives, i.e. figuring out where we deviated from the original estimate. In my experience, it is hardly worth all the trouble, especially as overspend usually already manifests itself indirectly via tasks delayed to the next sprint.

Stories versus sub-tasks

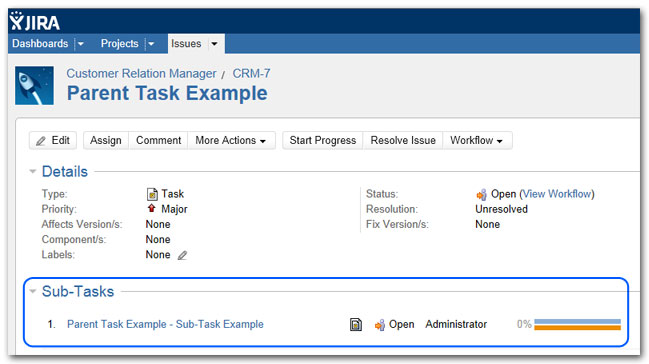

Just a bit of mechanics before wrapping up. All Scrum tools provide several layers of tasks:

- Epics (which I talked about in the past)

- Stories

- Subtasks

Subtasks differ from stories in three ways:

- They are created and owned by the engineer, as opposed to the Product Owner

- Acceptance criteria on them are optional

- They must be a part of a bigger story.

For example, if our story is "Create a RESTful service for data provisioning", its subtasks might be:

- Define a mock API

- Create auto-tests to drive the mock API (let's assume we are doing TDD)

- Implement call A

- Implement call B

Subtasks look perfectly suited for getting fine-grained units in place to drive burndown charts and proper planning. They are owned by the developer (so the Product Owner does not have to deal with umpteen user stories), and they are fine-grained by definition.

Unfortunately, I have yet to find a tool which burns down on subtask completion. The usual reasoning is that nobody apart from the engineer should care about internal tasks being done.

My personal take is that others do care, and having those reflected in charts makes sprints less opaque, but we have to work with what we've got.
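For illustration only, this is roughly what a subtask-driven burndown could compute. It is a sketch of my own, not something any tool I know of provides: the `Story` class is hypothetical, the 8-point size is made up, and weighting every subtask equally is a simplifying assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Story:
    title: str
    points: float
    # Subtask name -> done flag; every subtask is assumed to weigh the same.
    subtasks: dict = field(default_factory=dict)

    def remaining_points(self):
        """Points left, scaled by the share of subtasks still open."""
        if not self.subtasks:
            # No subtasks to go by: treat the whole story as outstanding.
            return self.points
        open_count = sum(1 for done in self.subtasks.values() if not done)
        return self.points * open_count / len(self.subtasks)


story = Story(
    title="Create a RESTful service for data provisioning",
    points=8,  # hypothetical size
    subtasks={
        "Define a mock API": True,
        "Create auto-tests to drive the mock API": False,
        "Implement call A": False,
        "Implement call B": False,
    },
)
print(story.remaining_points())  # 6.0
```

With one subtask out of four done, the story would burn down from 8 points to 6 instead of staying flat until the very end.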

In short, subtasks look great in theory, but in practice they are impossible to track, and with time I ended up reverting to stories throughout.

To tame a chart

Getting the burndown graph to reflect reality is as hard as it is important. The main recipe is having small tasks, and ticking things off only when they are done, not when they are "90% complete". Subtasks are the unfortunate victims of this approach, as Scrum tools do not reflect them in reports.
